This research program originated in an NSERC/Canada Council for the Arts New Media Initiative, continued in collaborations with Jordan Deitcher, Hal Myers (Thought Technology), Stephen McAdams (Schulich School of Music) and Robert Zatorre (Montreal Neurological Institute), and is now funded by the Graphics, Animation, and New Media (GRAND) Network of Centres of Excellence. Participants include Regan Mandryk (Saskatoon), Alissa Antle (SFU), Magy Seif El-Nasr (SFU), Bernhard Riecke (SFU), Gitte Lindgaard (Carleton), Heather O'Brien (UBC), Karon MacLean (UBC), Frank Russo (Ryerson), and Diane Gromala (SFU).

The goal of this project is to develop and validate a suite of reliable, valid, and robust evaluation methods, both quantitative and qualitative, objective and subjective, for computer game, new media, and animation environments that address the unique challenges of these technologies. Our work in this area at McGill spans the biological and neurological processes involved in human psychological and physiological states, pattern recognition of biosignals for automatic psychophysiological state recognition, biologically inspired computer vision for automatic facial expression recognition, physiological responses to music, and stress/anxiety measurement using physiological data.

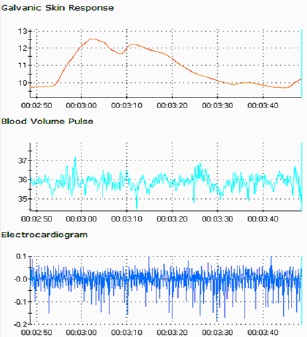

Our interest in the use of biosignals began with several explorations of live emotional mapping to a multimedia display, providing an external and directly accessible manifestation of the individual's emotions. Applications include augmented theatrical performance, therapy, or videoconference communication. The representation may be either connected with social conventions or more abstract. For example, when someone becomes angry, their visual representation might become red, or storm clouds might appear in the background, whereas if the individual is calm, the sounds of gentle waves might be heard quietly. For therapeutic applications, the representation could be used as "emotional feedback", similar to biofeedback approaches, but now using potentially more meaningful content. This work was also applied to characterizing individuals' excitement and stress levels in various activities, ranging from opera singing to interacting with various computer input devices.
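The mapping from a recognized emotion to its displayed representation can be illustrated with a minimal Python sketch. It is an illustration only, not the system described here: the `Representation` type, the `EMOTION_MAP` table, the `represent` function, and the volume value are all hypothetical names and numbers chosen for this example, and the emotion label is assumed to come from a separate biosignal classifier that is not shown.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Representation:
    """Hypothetical description of what the multimedia display should show."""
    tint: Optional[str] = None        # colour applied to the person's image
    background: Optional[str] = None  # visual element added behind the person
    sound: Optional[str] = None       # ambient audio to play
    volume: float = 0.0               # 0.0 (silent) to 1.0 (full); assumed scale


# Assumed lookup table, using the two examples given in the text.
EMOTION_MAP = {
    "angry": Representation(tint="red", background="storm clouds"),
    "calm": Representation(sound="gentle waves", volume=0.2),
}

# Fallback when the emotion has no entry: leave the display unchanged.
NEUTRAL = Representation()


def represent(emotion: str) -> Representation:
    """Return the display representation for an emotion label (case-insensitive)."""
    return EMOTION_MAP.get(emotion.lower(), NEUTRAL)


if __name__ == "__main__":
    for label in ("angry", "calm", "bored"):
        print(label, represent(label))
```

A table-driven mapping of this kind keeps the choice between conventional and more abstract representations in data rather than code, so one set of representations could be swapped for another without changing the recognition stage.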

We subsequently applied our expertise in biosignals analysis to a study involving the characterization of intensely pleasurable feelings induced by music, described as similar to those of extremely rewarding stimuli. This work included characterizing the body's physiological reactions to intensely pleasurable music; examining whether the reward areas of the brain are activated in response to pleasurable music; and finally, determining whether music, in itself, can lead to a release of potent rewarding neurochemicals despite the absence of an external basis for such release.

(video)

(video)

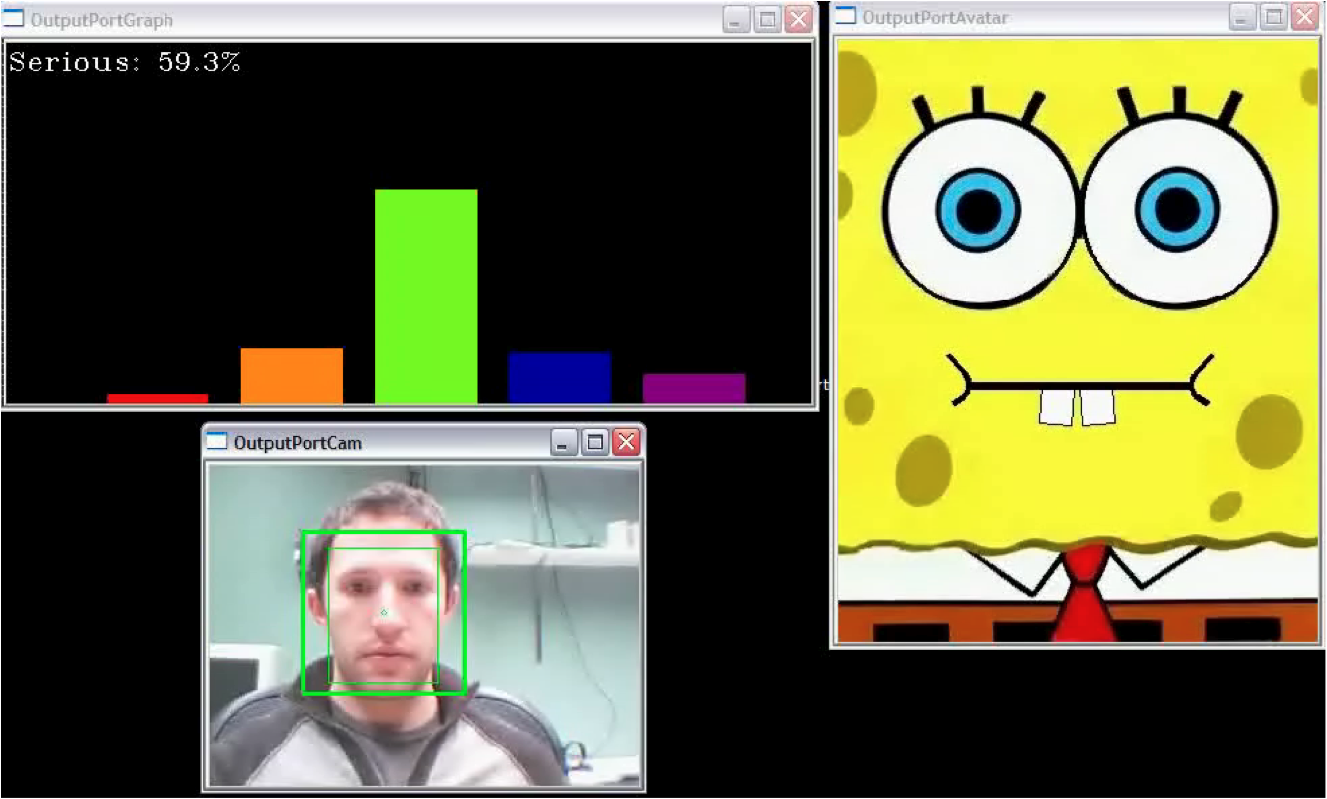

Since physically coupled biosignal sensors are not always appropriate, we also investigated emotion recognition from video analysis of facial expressions. One interesting application of this work is the treatment of autism spectrum disorder (ASD), in which affected individuals suffer an inability either to express personal feelings adequately or to recognize emotions in other people. In this context, one potentially beneficial avenue of technologically assisted treatment is expressivity training through recognition of facial expressions. Using recognition of the five prototypical facial expressions (surprise, happy, serious, sad, disgust), we can synthesize an age-appropriate feedback display, for example, an "emotional mirror" of the individual, in which a well-liked character such as SpongeBob responds in real time by mimicking the user's expression.

Last update: 18 August 2010